AI: Why You Should and Shouldn't Be Afraid

Artificial Intelligence (AI) is a term packed with meaning, and it means something different to everyone. The rise of large language models (LLMs) and the pace at which the technology is advancing have triggered some wild speculation about what the future holds. While sensational headlines predict AI’s imminent takeover of jobs or its potential to achieve sentience, a closer examination reveals a complex tool still in the early stages of development. In this post, we will break down some of the common fears surrounding AI, both valid and less so. And let’s be clear: there are good reasons to be concerned about the technology, although they might not be the first ones that come to mind.

As with any discussion of modern AI, we should note that this post was written on June 25, 2024. Many of the limitations and issues highlighted here are likely to evolve rapidly. New models with enhanced capabilities are being released almost monthly, and they are moving from text-only to multi-modal systems that can understand live audio and video feeds. This technology is advancing at an unprecedented pace. To stay informed about the latest developments in AI, consider exploring Alta3 Research’s variety of AI courses, which are continually updated with the newest information, models, and techniques.

Common Fears and Misconceptions About AI

AI Will Take All Jobs

One of the most pervasive fears is that AI will lead to mass unemployment by replacing human workers in every sector. This fear is rooted in the visible advances in AI and automation technologies that have already begun to change the landscape of various industries. Fortunately, the truth is a bit more complicated. While robotics and AI will almost certainly replace some jobs eventually, we aren’t quite there yet.

Pieter den Hamer, a VP of research at Gartner who focuses on artificial intelligence, believes that “Every job will be impacted by AI, most of that will be more augmentation rather than replacing workers.” This is a widely held belief, because while AI can automate certain tasks, it simply isn’t robust enough to take over for human employees at the moment. From training on new tasks to understanding complex contextual requests, LLMs just aren’t ‘there’ yet.

Furthermore, when AI does start replacing people in the workforce, that does not mean all jobs will suddenly be taken over. Remember that 60% of jobs today didn’t exist in 1940, and that pace is accelerating dramatically with technology. The World Economic Forum predicts that 65% of today’s elementary school students will be employed in jobs that do not yet exist. When AI eventually does come for your job, there will be a whole world of new opportunities for you.

AI Will Gain Sentience and Rebel

Inspired by countless sci-fi movies and dystopian narratives, many people fear that AI will become self-aware and pose a threat to humanity. This fear has been exacerbated by a number of ignorant takes claiming that models have already become sentient, including one from a Google engineer. Claims like these are good for getting clicks, but they aren’t grounded in the reality of our current situation.

Current AI models, including LLMs like ChatGPT, are not sentient. They operate on algorithms and data without any form of consciousness or self-awareness. AI models perform tasks by processing vast amounts of data and making predictions based on patterns in that data. They do not have desires, goals, or awareness. The idea of AI achieving sentience and rebelling is, at least for now, purely speculative and far beyond current technological capabilities. This is discussed in greater depth here, but at their core, AI models are very complicated statistical models. Any response that seems to indicate sentience appears because the models have been trained on vast amounts of human text, and we love stories about a rogue AI taking over.
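To make the “statistical model” point concrete, here is a deliberately tiny sketch: a bigram model that predicts the next word purely from how often words followed each other in its training text. Real LLMs use neural networks over tokens with far longer contexts, so this illustrates the principle only and is not how ChatGPT is implemented. The toy corpus and the function names `train_bigram` and `predict_next` are our own assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram(text: str) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    model: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word that most often followed `word` in training, or None if unseen."""
    followers = model.get(word.lower())  # .get() does not create new keys
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy training text (an assumption for illustration): a few lines of "rogue AI" fiction.
corpus = (
    "the robot wants to rule the world . "
    "the robot wants to help . "
    "the robot wants to rule"
)

model = train_bigram(corpus)
print(predict_next(model, "robot"))    # wants
print(predict_next(model, "to"))       # rule
print(predict_next(model, "unicorn"))  # None
```

Asked what follows “to”, the model answers “rule”. It does so not because it has ambitions, but because that pairing was the most common one in its training text. LLMs are vastly more sophisticated, but the same principle applies: a model trained on stories about rogue AI can write convincingly about taking over without wanting anything at all.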

AI Will Be Perfect and Infallible

There is a misconception that AI systems, once implemented, are always accurate and free from errors, which leads to over-reliance on these technologies. While these models are truly incredible and can seem like geniuses in every field, it’s important to know that they are not designed to be repositories of all human knowledge. They can and will invent facts that sound real if those facts fit the narrative flow of the prompt and response.

AI systems are only as good as the data they are trained on, the algorithms that drive them, and the prompts you give them. They can and do make mistakes, especially when faced with biased, incomplete, or poor-quality data. Additionally, AI models can generate plausible but incorrect information, because they are designed to produce coherent text rather than to guarantee factual accuracy. If your prompts are too demanding or too vague, the model may ‘hallucinate’ responses. Continuous monitoring, validation, and updates are essential to ensure the reliability and accuracy of AI systems.

The models will continue to improve, and hallucinations will likely become less common over time, but we do not think they’ll ever fully disappear. According to Sam Altman, CEO of OpenAI, hallucinations are actually a good thing.

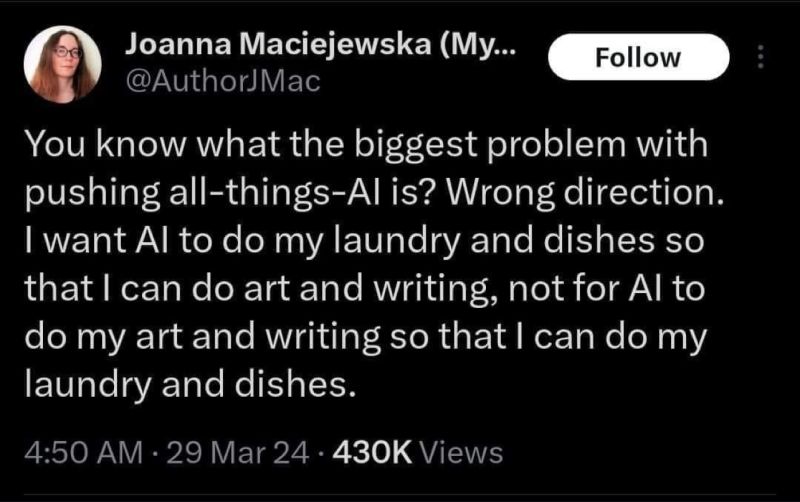

AI Will Replace Human Creativity

Another fear is that AI will encroach on creative fields, rendering human creativity obsolete and devaluing human contributions.

The truth here is actually pretty complicated. With image generation tools capable of creating photo-realistic pictures, video generators getting closer and closer to professional-quality ‘footage’, and chatbots churning out news articles, blog posts, and even scientific articles, it can feel like there is a valid concern that humans are replacing themselves with this nascent technology. It’s hard to disagree when we see things like Toys “R” Us revealing a commercial made 100% with AI.

Fortunately, these tools work far better as collaborators than as sole creative directors. Even as the tools continue to improve, there is no good reason to think we will be replaced. Maybe in a decade your favorite AI band will open for your favorite human band.

Well-Founded Fears About AI

Next, we’re going to discuss some of the reasons you should be afraid of AI, and some of the things you can do to combat the reality of AI encroaching on our lives.

Deepfakes and Misinformation

The ability of AI to create realistic but fake content, from falsified news articles to audio and video deepfakes, poses significant challenges for information integrity and security. Right now, the development of technology to identify and counteract deepfakes is lagging far behind. The current best advice is to research and improve your own digital literacy, and to look closely at every image and video you are shown over the coming months. We’ve mentioned checking sources, fact-checking articles, and looking for words like ‘delve’ or ‘meticulous’, but what about images and videos? For now, AI-generated imagery and video have some obvious telltale signs:

- Look for ‘artifacts’: Zoom in on details and look for odd shapes, objects that seem to appear or disappear, or smudged-out features.
- Words: This may only be a useful tell for a few more weeks or months, but many AI-generated images show inconsistent spelling, fonts, and lettering when they include words.
- Smooth faces: This is getting harder and harder to discern, but faces created by AI are often so smooth that they look plastic on close inspection.
- Look for parallels or matches: Image and video generators often struggle with parallel lines, so fence posts, infrastructure, and similar structures will often have strange bends or curves. Earrings or gloves may have different details despite being a paired set.
- Study the backgrounds: Because of the way the models are trained, imagery in the foreground is often far better rendered than the background.

Finally, and perhaps most importantly, use reverse image search. Do you think an image might be AI-generated? Run it through a reverse image search and examine the results. This is by far the most reliable detection method available to you, and going forward it may quickly become the only viable one. Audio and video models are improving at a faster rate than chat models. Be prepared.

Bias and Discrimination in AI

One of the most pressing issues in AI development is the potential for these systems to perpetuate and even amplify existing biases present in their training data. AI systems can produce, and already have produced, biased outcomes. As we rely on these systems more and more, there will be mistakes leading to discrimination and unfair treatment across various domains, from hiring practices to criminal justice. This is not a hypothetical concern: we’ve already seen real-world examples of AI bias in action, such as facial recognition systems performing poorly on people of color or hiring algorithms favoring male candidates.

The reality is that bias in AI is a significant issue that requires careful data curation, diverse training sets, and continuous monitoring to mitigate. Developers must be acutely aware of potential biases in their training data and algorithms, actively working to address these issues. Implementing fairness and accountability measures is crucial to ensure that AI systems are more equitable and just.
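Continuous monitoring for bias can start with something as simple as comparing outcomes across groups. The sketch below computes each group’s selection rate and its ratio to the highest-rate group, flagging ratios under 0.8. That threshold borrows the “four-fifths rule” from US employment guidelines and is only a coarse screening heuristic, not a complete fairness audit. The function name `impact_ratios`, the group names, and the counts are all hypothetical.

```python
from typing import Dict, Tuple


def impact_ratios(
    outcomes: Dict[str, Tuple[int, int]], threshold: float = 0.8
) -> Dict[str, Tuple[float, bool]]:
    """Map each group to (its selection rate / the best group's rate, flagged?).

    `outcomes` maps a group name to (number selected, number of applicants).
    A group is flagged when its ratio falls below `threshold`.
    """
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants

    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")

    return {group: (rate / best, rate / best < threshold) for group, rate in rates.items()}


# Hypothetical screening results from an automated hiring tool: (selected, applicants).
results = {"group_a": (45, 100), "group_b": (27, 100)}

for group, (ratio, flagged) in impact_ratios(results).items():
    print(f"{group}: ratio={ratio:.2f} flagged={flagged}")
```

Here group_b is selected at 60% of group_a’s rate and gets flagged. A flag is a prompt to investigate the data and the model, not proof of discrimination, and a passing ratio does not prove a system is fair.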

Ongoing research and collaboration across disciplines, including computer science, ethics, and social sciences, are essential to tackle bias effectively. As AI becomes more integrated into decision-making processes, addressing this issue becomes increasingly critical to prevent the reinforcement and exacerbation of societal inequalities.

AI in Autonomous Weapons

The development of AI for military or policing purposes raises serious ethical and safety concerns, particularly regarding autonomous weapons systems. The prospect of AI-powered weapons that can select and engage targets without meaningful human control is deeply troubling. It raises questions about accountability, the potential for unintended escalation of conflicts, and the fundamental ethics of delegating life-and-death decisions to machines.

There is an urgent need for international regulation and oversight to prevent the development and deployment of AI-driven weapons without human control. Ensuring that AI is used responsibly in military applications is crucial to maintaining global security and ethical standards. This will require cooperation between nations, military experts, AI researchers, and ethicists to establish clear guidelines and potentially even bans on certain types of autonomous weapons.

Lack of Transparency and Accountability

As AI systems become more complex and are deployed in critical decision-making roles, their opacity poses significant challenges. The lack of transparency in AI systems can lead to unaccountable decisions and erode public trust. When an AI makes a decision that affects people’s lives, whether it’s approving a loan, recommending medical treatment, or influencing a parole decision, it’s crucial that we understand how and why that decision was made. At the moment, each company that releases a model chooses how, and to what extent, it discusses its methodology with the public.

For now, the only regulation is internal to the companies themselves. We are left to trust that developers will prioritize creating AI systems that are not only powerful but also comprehensible and trustworthy. This may involve trade-offs between performance and explainability, but it’s a necessary step to ensure that AI systems serve society’s best interests, and we have no assurance that it’s happening.

Government Surveillance

Similar to autonomous weapons systems, the use of AI for surveillance by governments raises significant privacy and ethical concerns that we must grapple with as a society. AI could potentially be used by governments for mass surveillance, infringing on individual privacy and civil liberties. Technologies like facial recognition, when combined with AI’s data processing capabilities, create unprecedented opportunities for monitoring and tracking individuals.

This is not just speculation - we’ve already seen examples of AI-powered surveillance being used in ways that raise serious ethical questions, from China’s social credit system to the use of facial recognition in protests.

The potential for AI-driven surveillance to infringe on privacy rights is a well-founded concern. Governments and regulatory bodies must establish clear guidelines and limitations on the use of AI for surveillance purposes. This includes setting boundaries on data collection, ensuring transparency in how surveillance data is used, and implementing robust oversight mechanisms. At the moment, the EU has some pending legislation on AI and that’s about it. Even that legislation does not directly address many surveillance concerns.

Embracing AI’s Potential for Positive Impact

While there are many reasons for fear and concern, there are also some truly exciting and positive developments happening with AI. And in this humble writer’s opinion, the potential for positive impact on our lives far outweighs the potential concerns. AI technologies can drive innovation, improve efficiency, and solve complex problems across various domains, including:

- Healthcare: AI can assist in diagnosing diseases, personalizing treatment plans, and managing healthcare resources more effectively. For instance, AI algorithms have shown promise in detecting breast cancer in mammograms with accuracy comparable to human radiologists.
- Education: AI-powered tools can provide personalized learning experiences, support educators, and enhance educational outcomes for students. A study by Carnegie Learning found that their AI-based math learning software improved student test scores by about 12 percentile points.
- Environmental Sustainability: AI can contribute to monitoring and mitigating environmental impacts, optimizing resource use, and addressing climate change challenges. Google’s DeepMind AI has been used to reduce the energy used for cooling Google’s data centers by up to 40%, significantly lowering their carbon footprint.
- Economic Growth: By automating routine tasks and enabling more sophisticated analysis and decision-making, AI can boost productivity and drive economic growth. A report by PwC estimates that AI could contribute up to $15.7 trillion to the global economy by 2030.

Staying Informed and Engaged

As AI technology continues to evolve, staying informed and engaged with the latest developments is crucial. Educational opportunities, such as those offered by Alta3 Research, can help individuals and organizations keep pace with the rapid advancements in AI and develop the skills needed to navigate this dynamic landscape.

By fostering a deeper understanding of AI, addressing valid concerns, and embracing the opportunities it presents, we can move forward with confidence and optimism, leveraging AI to create a better future for all. Stay up to date with all the AI news and advancements by following Alta3 Research’s AI courses, where you can learn about the latest models, techniques, and ethical considerations in AI development.