Unmasking AI: The Truth Behind the Hype

Author: Joe Spizz

Artificial Intelligence (AI) and Large Language Models (LLMs) have been red-hot topics in recent months, fueling both excitement and anxiety. The sudden appearance of a technology akin to an alien intelligence has led to widespread misconceptions about what AI is, how it functions, and what the implications might be for the future. However, if you go past the sensational headlines of ChatGPT gaining sentience or AI taking all our jobs, you will uncover a new, powerful tool that is very much still in its developmental stages. Throughout this post you will learn what we know about AI and how it works, what it can and cannot do, and how to deal with some common current issues.

It is also imperative to call out specifically that this blog post is being written on June 24th, 2024. Many of the limitations of current AI models we are about to discuss are very likely to be short-lived. New models with new capabilities are being released on nearly a monthly basis, sometimes faster. From open to closed source, from text-only to multi-modal models that can even understand live audio and video feeds, this technology is advancing at lightning speed. If you want to stay up to date with AI research, you can learn more with Alta3 Research. We teach a variety of AI courses that are constantly refreshed and upgraded with the newest information, models, and techniques.

What is AI? What is ChatGPT? What is going on?

We humans have used the word “AI” in a LOT of different ways. For many, Siri and Alexa are AI. For others, AI is a hilariously inept chatbot that completely breaks down when talking to another chatbot. For us, AI is the name given to a ‘Large Language Model’ (LLM). While the two terms aren’t exactly synonyms, the distinction is meaningless at the moment. LLMs are a modern evolution of relatively old technology, and they are designed to understand and generate human-like text.

This is the first major takeaway. AI models are designed only to generate human-like responses. They are not designed to generate intelligent responses, or even factual ones. In fact, when writing this blog I asked ChatGPT to read over my work and create a list of further readings on the topics discussed. It gave me a list of 23 links that do not exist on the internet. The second attempt provided 14 links that also did not exist. The very professional technical term for this is a “hallucination”. When you are interacting with these AI models, it is important to remember that you can and will get hallucinations disguised as convincing facts.

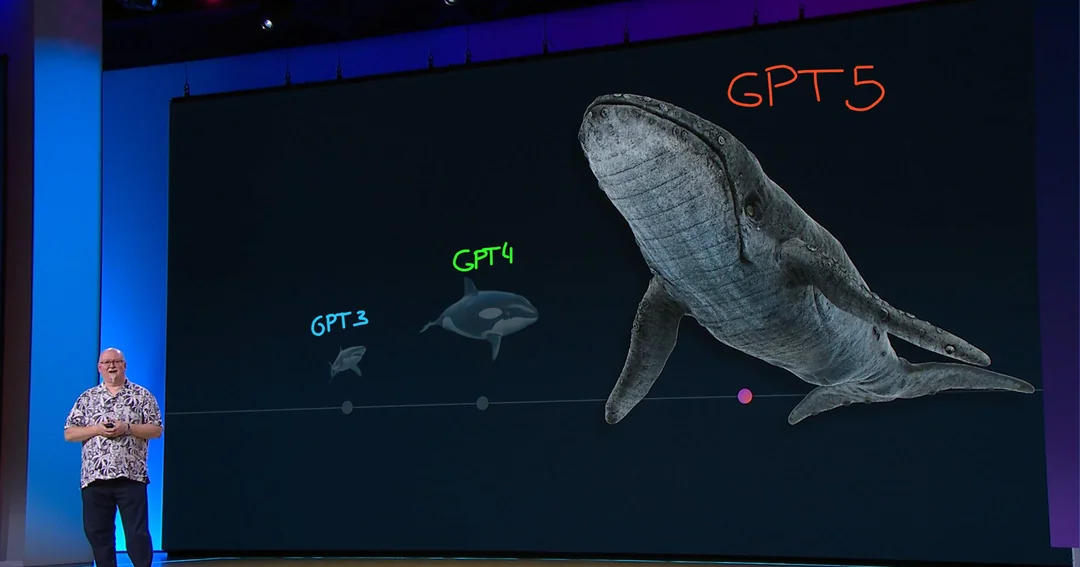

‘ChatGPT’ is simply the name for the most popular, biggest, and best-performing AI model at the moment. It was created by OpenAI, and multiple versions of the model are currently available for use, including 3.5, 4, and, most recently, 4o. It is rumored that we will see the release of GPT-5 this year as well. And, well, the hype for this model is… whale-sized.

Some other popular models include Gemini (formerly known as Bard), Claude, and Llama. However, if you dig into sites like Hugging Face you will find there are hundreds of thousands of models, many of which are open source.

LLMs are remarkable tools because of their ability to generate very human-sounding text combined with their ability to understand what they are asked to generate. ChatGPT is an incredibly impressive chatbot whose answers have reportedly been rated as more empathetic than doctors’, and LLMs have reportedly helped solve previously unsolved problems in modern mathematics. They are also being used to create convincing fraudulent communication for phishing scams. If you haven’t received a scam text from an AI model yet, you will soon. This amazing versatility is all possible because of how ‘prompt engineering’ works with LLMs.

How LLMs Function

LLMs are statistical models that predict the next word in a sentence based on the prompt and all previously generated words. If that sounds dangerously like math, that’s because it is. These models convert everything you type into numbers, then multiply those numbers against each other in a very complicated and ‘compute-intensive’ way. When the results are decoded back into words, you get your response. If this isn’t enough for you, and you’re interested in the specifics of the Transformer model and how it works, you should consider taking Open Source Generative AI, which dives into AI model building in great depth.

That’s all well and good, but what is all this ‘math’ actually doing? Let’s start with a very simple example that demonstrates the underlying concept. Imagine that the AI takes every word it learns during training (which we’ll discuss later) and plots it on a 3-dimensional graph, depending on its context. (Real models use hundreds or thousands of dimensions, but the idea is the same.) Each word ends up grouped ‘near’ other words that are often used in or around it. What this means is that within the model, if you take the number value for the word “King”, subtract the value of “man”, and add “woman”, the result lands very close to the value assigned to the word “Queen”.
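To make the King and Queen arithmetic concrete, here is a minimal sketch in Python. The word list, the three axes, and every number in `EMBEDDINGS` are hand-picked assumptions for illustration, not values from any real model, and the helper names `cosine_similarity`, `nearest_word`, and `analogy` are hypothetical.

```python
import math

# Hypothetical 3-dimensional word values, hand-picked for illustration only.
# Assumed axes: (royalty, maleness, femaleness).
EMBEDDINGS = {
    "king":  (0.9, 0.9, 0.1),
    "queen": (0.9, 0.1, 0.9),
    "man":   (0.1, 0.9, 0.1),
    "woman": (0.1, 0.1, 0.9),
    "llama": (0.0, 0.3, 0.3),
}

def cosine_similarity(a, b):
    """Measure how closely two vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def nearest_word(vector, exclude=()):
    """Return the known word whose value lies closest to the given vector."""
    candidates = {w: v for w, v in EMBEDDINGS.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine_similarity(vector, candidates[w]))

def analogy(a, b, c):
    """Compute a - b + c and return the nearest remaining word."""
    target = tuple(
        x - y + z
        for x, y, z in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])
    )
    return nearest_word(target, exclude={a, b, c})

result = analogy("king", "man", "woman")
print(result)
```

With these made-up numbers the arithmetic lands on "queen". In a trained model the result is only approximately equal to the target word, which is why the sketch searches for the nearest word rather than an exact match.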

When you type a prompt, the LLM processes the input text and ‘tokenizes’ it, breaking it down into smaller units like words or subwords. Those tokens are converted to their numeric values, which were assigned during training. The model uses its learned understanding of where each word belongs in relation to all other words to grasp the context and intent, as well as predict the next word in the sequence. For each possible next word, the model assigns a probability based on its training and, in the simplest case, selects the word with the highest probability. This process repeats until the model generates a complete response.

This happens word by word, with the model examining the entire string of words each time. That means that when you ask it to create a poem about llamas, it ‘reads’ your prompt, does mathematics to determine which word or token it ‘thinks’ is most likely to come next, then prints the first word of the poem, probably part of the title. From there, it reads your entire prompt plus the first word it already produced, and maths out the most likely next word. Repeat until you hit a special set of coded conditions and you have your response.
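The steps in that paragraph can be sketched in a few lines of Python. Everything here is a simplifying assumption: `VOCAB` is a tiny made-up vocabulary, `tokenize` splits on spaces where real tokenizers split into subwords, and the scores passed to `pick_next` are invented stand-ins for what a trained model would calculate.

```python
import math

# Hypothetical toy vocabulary; real models learn tens of thousands of tokens.
VOCAB = {"write": 0, "a": 1, "poem": 2, "about": 3, "llamas": 4, "<unk>": 5}

def tokenize(text):
    """Lowercase and split on whitespace (a stand-in for subword tokenizing)."""
    return text.lower().split()

def to_ids(tokens):
    """Convert each token to its numeric value, or <unk> if it is unknown."""
    return [VOCAB.get(token, VOCAB["<unk>"]) for token in tokens]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(score - largest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def pick_next(candidates, scores):
    """Return the candidate with the highest probability, and that probability."""
    probs = softmax(scores)
    best = max(range(len(candidates)), key=lambda i: probs[i])
    return candidates[best], probs[best]

ids = to_ids(tokenize("Write a poem about Llamas"))
# Made-up scores standing in for the output of a trained model.
word, prob = pick_next(["The", "Llamas", "Banana"], [2.0, 1.0, -1.0])
print(ids, word, round(prob, 2))
```

Always taking the single most likely word is only one possible strategy. Many deployed chatbots add some randomness when choosing among the likely candidates, which is one reason the same prompt can produce different responses.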
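Here is a rough sketch of that word-by-word loop in Python. The table `NEXT_WORD_PROBS` is a made-up stand-in for a model, and it cheats by looking only at the last word, whereas a real LLM examines the entire prompt and response each time. The `<end>` marker and the `max_words` limit are assumed examples of the ‘special set of coded conditions’ that stop generation.

```python
# Hypothetical lookup table standing in for a trained model: for each last
# word, the made-up probability of each possible next word.
NEXT_WORD_PROBS = {
    "<start>": {"llamas": 0.7, "the": 0.3},
    "the": {"llamas": 1.0},
    "llamas": {"hum": 0.6, "spit": 0.4},
    "hum": {"softly": 0.8, "<end>": 0.2},
    "spit": {"<end>": 1.0},
    "softly": {"<end>": 1.0},
}

def generate(max_words=10):
    """Build a response one word at a time until a stop condition is hit."""
    words = ["<start>"]
    while len(words) <= max_words:           # stop condition 1: length limit
        options = NEXT_WORD_PROBS[words[-1]]
        next_word = max(options, key=options.get)
        if next_word == "<end>":             # stop condition 2: end marker
            break
        words.append(next_word)
    return " ".join(words[1:])               # drop the <start> marker

print(generate())
```

Each pass through the loop appends exactly one word and then asks the ‘model’ again, which mirrors how a long response is really just many next-word predictions chained together.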

Note that nowhere in the dry and boring technical discussion above did I mention consciousness or thinking. That is because the only things happening are just math and training data. These models are not sentient, they are statistics. Even before we discuss the details of training the models, you can already see how humanity’s long history of writing stories about a rogue AI ‘awakening’ and taking over would bias the way the math works out. You can guide the model into telling you that it is alive and ready to take over the world, but it isn’t. And it can’t.

Yet.

How LLMs Are Trained

Now that we understand that these models are just complicated math that ends up sounding human based on ‘training data’, let’s take a look at what training an AI actually entails. The basics of training an LLM involve feeding it enormous amounts of text from books, articles, websites, and more. The specifics of training can vary, but the basics are indeed quite basic.

Good data consists of a large number of questions paired with answers that humans have confirmed as accurate and desirable. The model is provided with the question as a prompt. The response token generated is compared to the desired token. A process known as ‘back-propagation’ then changes the ‘weights’ of the model until the response token matches the desired response. ‘Weights’ is the term used for a special set of numbers within the model that adjusts how the input data is transformed into the output, fine-tuning the model’s accuracy.
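The compare-and-adjust cycle described above can be sketched with a deliberately tiny ‘model’ in Python. This is an assumption-heavy simplification: the model is just one weight per candidate token, so back-propagation collapses into a single gradient step, whereas a real LLM pushes the same kind of correction back through many layers. The candidate words, starting weights, and `learning_rate` are all made up.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(score - largest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def train(weights, desired_index, learning_rate=0.5, max_steps=100):
    """Nudge the weights until the most probable token is the desired one."""
    weights = list(weights)
    for step in range(max_steps):
        probs = softmax(weights)
        if probs.index(max(probs)) == desired_index:
            return weights, step
        # Gradient of the cross-entropy loss for each weight: prob - target.
        for i, prob in enumerate(probs):
            target = 1.0 if i == desired_index else 0.0
            weights[i] -= learning_rate * (prob - target)
    return weights, max_steps

# Hypothetical question: "What is the capital of France?"
candidates = ["London", "Paris", "Rome"]
start_weights = [2.0, 0.0, 0.0]              # the untrained model prefers London
trained, steps = train(start_weights, desired_index=candidates.index("Paris"))
answer = candidates[trained.index(max(trained))]
print(answer, "after", steps, "adjustments")
```

The point to notice is that nothing in the loop ‘understands’ geography. The weights are simply pushed, a little at a time, toward whatever answer the training data marks as desired.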

Training ‘teaches’ the model to predict the next word in a sentence based on the context provided by the previous words in the prompt and generated response. Over time, it refines its predictions and becomes capable of generating highly plausible text. This process is more complicated mathematics, and the honest truth is that no one fully understands why this works so well. We know that it works, and we know some of the levers to improve the quality of the responses. Fortunately for us, we do know how to use all of this to our advantage. A final note on training is that once these models are trained, their intelligence level is essentially locked in. While you can ‘fine-tune’ a model for specific purposes, general training is, in practice, a one-time event.

Right now, some of you are feeling nervous. If a bad guy were to train an AI model with bad-guy data, they could unleash some truly powerful nastiness. Fortunately, this is only partially true. One of the primary factors in the performance of the models is the quantity and quality of the training data provided. Smaller sets of data would result in a qualitatively worse model, to a significant degree. That being said, understanding that these models are trained on human data with human biases is very important. Even the most powerful models have demonstrated issues with racism and sexism due to the prevalence of bias in training data.

Importance of Prompt Engineering

Prompt engineering is where the true ‘magic’ of AI happens. The way a prompt is phrased will significantly affect the response. In fact, prompt engineering is sophisticated enough to be considered its own field within AI research. Alta3 Research can help you dive deep into this topic as well! That being said, there are some basic concepts that can take you quite far.

Providing a clear and specific prompt can guide the model to produce more accurate and relevant responses. For example:

- Simple Prompt: “Explain AI.”

- Specific Prompt: “Provide an in-depth technical explanation of how AI models are trained using supervised learning techniques.”

In the first example you’ll get a very general, non-detailed overview of AI for the layman because the model has been given very few pieces of information to understand what the expected response should look like. In the second example the model will understand you have a much deeper-than-average understanding of AI and will respond accordingly. More detailed prompts also help to significantly reduce the risk of hallucinations.

Keep in mind what we know about AI and how it functions. It’s attempting to produce the best next token considering the prompt it has been given. You can increase the ‘intelligence’ of the response by literally telling the model to respond in a highly-intelligent way. Of course you could alternatively tell the model to respond as if it’s a 90’s kid. Try it out some time, it’s da bomb yo.

Once again, I can see the wheels in your head turning. If I can prompt a model to act a certain way just by telling it to act a certain way, couldn’t I create special instructions before releasing the model to the public? Yes, yes you can. This is why Gemini was criticized for being overly censored, and why OpenAI’s custom GPTs work. The LLM is given a prompt full of instructions that are invisible to you, but prepended to your prompts before they ever get to the model. What is to stop someone from using these tools for less than appropriate purposes? Very little! Right now each company is left to decide how and if to regulate and restrict the way users interact with their model. While OpenAI and most others have taken steps to restrict some of the more egregious abuses of the technology, we do not currently have a good solution for this problem.
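A minimal sketch of that prepending step might look like the following Python. The instruction text in `SYSTEM_PROMPT` and the function name `build_model_input` are hypothetical, since providers generally do not publish their hidden instructions, and real chat services typically pass structured messages rather than one joined string.

```python
# Hypothetical hidden instructions, invented for this example.
SYSTEM_PROMPT = (
    "You are a helpful assistant. Be polite. "
    "Refuse requests for harmful content."
)

def build_model_input(user_prompt, system_prompt=SYSTEM_PROMPT):
    """Return the full text the model would see for a given user prompt."""
    return f"{system_prompt}\n\nUser: {user_prompt}"

full_input = build_model_input("Write a poem about llamas.")
print(full_input)
```

The user only ever types the last line, yet the model predicts its next words from the whole string, so whoever controls the hidden portion shapes every response.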

Common Issues with LLMs

Now that you understand the basics of what AI is and how it works, let’s discuss three prevalent quirks that we’ve discovered, and how you can work around them. With all of these issues, awareness of the problem goes a long way towards eliminating its influence over you.

Hallucinations

As discussed, LLMs can and will create very real-looking but false information without guidance. This is because LLMs are designed to produce coherent text rather than accurate information. Responding with “I don’t know” is never going to happen unless you specifically ask it to do so. You should always verify the information provided by AI systems against reliable sources to ensure accuracy. Furthermore, as AI ‘news’ filters into the mainstream more and more often, it becomes increasingly important to check the validity of any sources provided.

Tendency to Agree

LLMs often agree with the user’s initial idea, even if it is incorrect or flawed. This is both because of the way statistics works inside the model (the words you use influence the words the AI ‘thinks’ it should use) and because LLMs are often programmed to be agreeable and polite, leading to incomplete or biased advice. You can avoid this with good prompt engineering. Avoid showing preference in your prompt, or even explicitly tell the model to play devil’s advocate or to ignore the preference shown in your prompt.

Bias Towards Niceness

At the moment, nearly all AIs are programmed to be polite and agreeable, which can lead to overly positive feedback regardless of the actual quality of your work. This bias towards niceness can result in incomplete or biased advice. If you are looking for feedback on a professional email, resume, grant proposal, etc., you need to condition the AI, via your prompt, to respond with accurate and helpful criticism. Try including instructions such as “Act as if you are the grant reviewer, attempting to find fault with the grant” to get more actionable responses.
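If you find yourself asking for this kind of feedback often, the instruction can be wrapped in a small reusable template. The sketch below is one possible approach in Python. The function name `build_review_prompt`, the extra sentence asking for three weak points, and the sample draft are all assumptions added for illustration.

```python
def build_review_prompt(reviewer_role, goal, draft):
    """Wrap a draft in instructions that ask for criticism instead of praise."""
    return (
        f"Act as if you are {reviewer_role}, attempting to {goal}.\n"
        "Do not compliment the draft. "
        "List its three weakest points and how to fix each.\n\n"
        f"Draft:\n{draft}"
    )

prompt = build_review_prompt(
    "the grant reviewer",
    "find fault with the grant",
    "We request funding to study llamas.",
)
print(prompt)
```

The resulting text is what you would paste into the chatbot. Changing `reviewer_role` and `goal` lets the same template cover a resume, an email, or any other draft.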

What is AGI?

While we haven’t seen a LOT of discussion on this particular topic yet, some folks are already boldly tossing around the idea of achieving Artificial General Intelligence (AGI) within the next few years. AGI is the full-blown HAL 9000 or ‘Samantha’ version of AI. It refers to a machine with the ability to understand, learn, and apply knowledge across a broad range of tasks, similar to human intelligence. Every “AI take over” movie is actually based on AGI. Although to be fair to them, the only reason we have to say AGI instead of AI is because modern AI developers went and branded their new, fancy chatbot as an AI rather than give it an accurate name like “Virtual Intelligence”. Despite the current state of ‘AI’ and its incredible power, AGI remains a theoretical concept, and we are not close to achieving it.

The Future of AI

The future of where we are going with AI is as incredible as it is unknown. While we’ve discussed text-based AI in this blog, there will be highly advanced audio and video models available before the end of the year built on the same principles. Every day people are discovering new ways to use this technology in finance, scientific research, education, security, health care, and more. And you’ll begin to see more and more content that was generated at least in part with the help of AI models. As you do, it’s important to recognize how that content was generated. Keeping in mind that these models are highly manipulatable ‘best-next-word’ generators, and not intelligent creatures, will help you maximize your usage and spot the misuse of the technology. And you can stay up to date with ALL the AI news by following along with Alta3 Research’s AI courses.